Introduction

PACIFIC (ProActive ConversatIonal question answering in FInanCe) is a large-scale conversational question answering (CQA) dataset. It aims to stimulate progress in proactive CQA research over more complex and realistic tabular and textual data, especially data that requires numerical reasoning.

The PACIFIC dataset is built from the TAT-QA dataset, using its question-answer pairs as guidance for constructing conversation sessions. Compared with existing datasets, PACIFIC poses three key challenges:

- Proactivity: The system needs to proactively help the user clarify the intent of their question by asking clarifying questions;

- Numerical Reasoning: A large number of questions require numerical reasoning to answer;

- Hybrid Context: The context given is hybrid, comprising a semi-structured table and at least two relevant paragraphs that describe, analyze or complement the table.
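
As a rough illustration of these three properties, the sketch below models a single dialogue in Python. The class and field names (`HybridContext`, `Turn`, `Dialogue`, `is_clarifying_question`) and all toy values are assumptions made for this example only; they are not the schema or contents of the released PACIFIC files.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HybridContext:
    """Hypothetical container: one table plus its associated paragraphs."""
    table: List[List[str]]   # semi-structured table, first row is the header
    paragraphs: List[str]    # text that describes or complements the table

    def __post_init__(self) -> None:
        # The description above states "at least two relevant paragraphs".
        if len(self.paragraphs) < 2:
            raise ValueError("a hybrid context needs at least two paragraphs")


@dataclass
class Turn:
    """Hypothetical QA turn: a user question and the system response."""
    user_question: str
    system_response: str
    # True when the system responds with a clarifying question (proactivity).
    is_clarifying_question: bool = False


@dataclass
class Dialogue:
    context: HybridContext
    turns: List[Turn] = field(default_factory=list)


def count_clarifications(dialogue: Dialogue) -> int:
    """Count the turns in which the system asked for clarification."""
    return sum(1 for t in dialogue.turns if t.is_clarifying_question)


# Toy data, invented for illustration (not taken from the dataset).
example = Dialogue(
    context=HybridContext(
        table=[["", "2019", "2018"], ["Revenue", "120", "100"]],
        paragraphs=[
            "Revenue is reported in millions of dollars.",
            "The increase was driven by higher sales volume.",
        ],
    ),
    turns=[
        # Ambiguous question: the system asks back instead of answering.
        Turn("What was the change in revenue?",
             "Which period are you asking about?",
             is_clarifying_question=True),
        # Once clarified, answering needs numerical reasoning: 120 - 100.
        Turn("From 2018 to 2019.", "20"),
    ],
)

# The numerical reasoning step for the second turn, done over the table.
revenue_change = int(example.context.table[1][1]) - int(example.context.table[1][2])
```

In this toy session the first turn shows proactivity, the second requires a subtraction over table cells, and the context pairs the table with two paragraphs.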

In total, PACIFIC contains 2,757 dialogues associated with 19,008 QA turns.

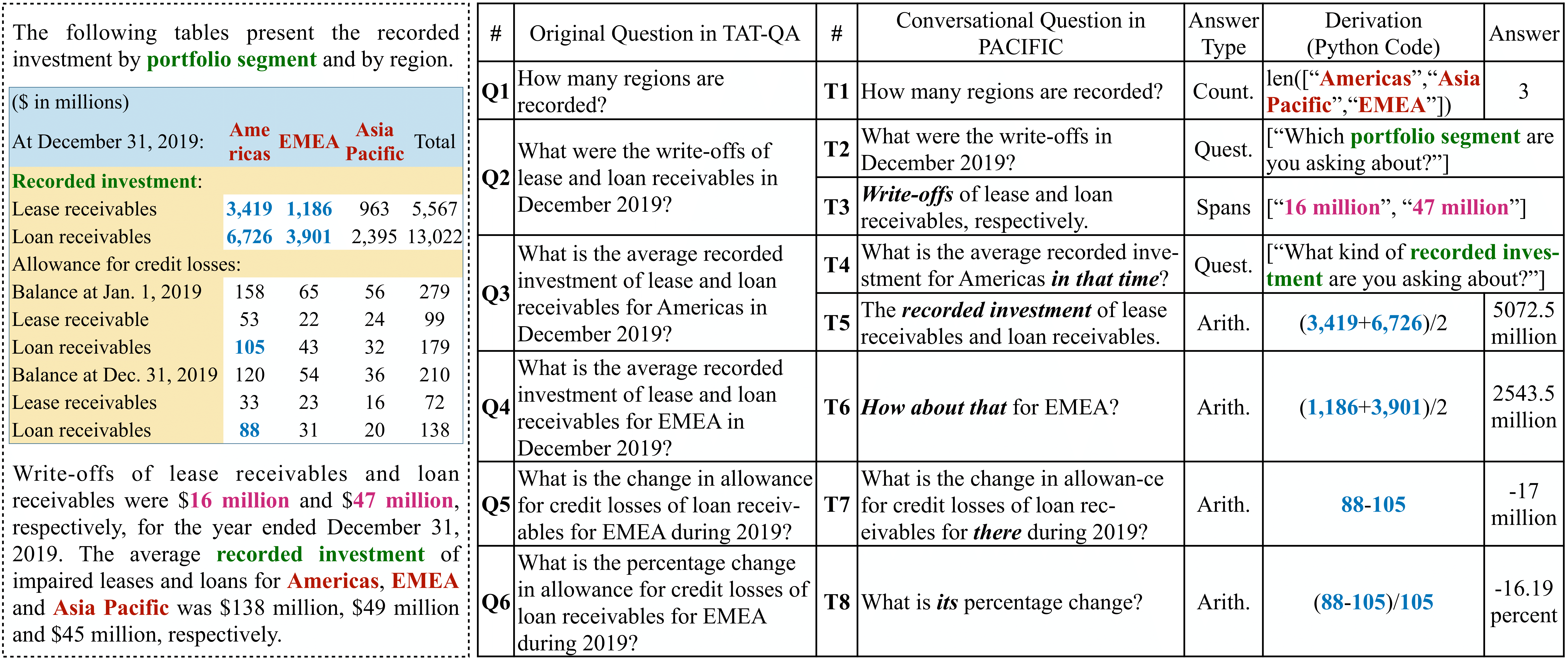

The figure above shows an example from PACIFIC alongside the corresponding example from TAT-QA. The dashed box on the left shows a hybrid context.

For more information, please read our EMNLP 2022 paper [PDF].